Building a hybrid data pipeline using Kafka and Confluent

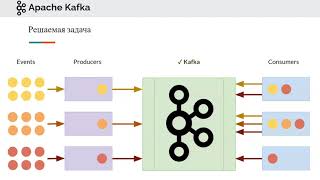

Building data pipelines is one of the most common things customers do on Google Cloud, and increasingly they want those pipelines to span hybrid infrastructure. A common pattern is to use Apache Kafka to stream data across hybrid and multi-region environments, creating a consistent data fabric. With Kafka and Confluent, customers can integrate legacy systems with Google services such as BigQuery and Dataflow in real time.

This session shows how to build a robust, extensible data pipeline that starts on-premises, streaming data from legacy systems into Kafka with the Kafka Connect framework. It then shows how to replicate streaming data from an on-premises Kafka cluster to a Kafka cluster on Google Cloud. Doing so connects legacy applications to analytics in the cloud through Google services such as AI Platform, AutoML, and BigQuery.

Speakers: Sarthak Gangopadhyay, Josh Treichel

Session: DA211, Google Cloud Next 2020

Watch more: Google Cloud Next ’20: OnAir → https://goo.gle/next2020

Subscribe to the GCP Channel → https://goo.gle/GCP
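
The first step the description mentions, streaming data from a legacy system into Kafka with Kafka Connect, might look roughly like the sketch below. It is not taken from the session. It assumes the legacy system is a relational database read through Confluent's JDBC source connector, and the connector name, JDBC URL, table, incrementing column (`id`), and topic prefix are all hypothetical placeholders. The function only builds the JSON payload that a Kafka Connect worker accepts on its REST endpoint `POST /connectors`; it does not contact any cluster.

```python
import json


def jdbc_source_connector(name, jdbc_url, table, topic_prefix):
    """Build a Kafka Connect REST payload for a JDBC source connector.

    All argument values are illustrative; nothing here comes from the session.
    """
    return {
        "name": name,
        "config": {
            # Confluent's JDBC source connector (assumed to be installed on the worker).
            "connector.class": "io.confluent.connect.jdbc.JdbcSourceConnector",
            "tasks.max": "1",
            "connection.url": jdbc_url,
            # Restrict the connector to a single table of the legacy database.
            "table.whitelist": table,
            # Pick up only new rows, tracked by a strictly increasing column.
            "mode": "incrementing",
            "incrementing.column.name": "id",  # hypothetical column name
            # Records land in the topic named <topic_prefix><table>.
            "topic.prefix": topic_prefix,
        },
    }


# Hypothetical on-premises database and naming scheme.
payload = jdbc_source_connector(
    name="legacy-orders-source",
    jdbc_url="jdbc:postgresql://legacy-db.example.internal:5432/erp",
    table="orders",
    topic_prefix="onprem.",
)

# This JSON document is what would be sent to the Connect worker.
print(json.dumps(payload, indent=2))
```

Under these assumptions, rows from `orders` would be written to a topic named `onprem.orders` on the on-premises cluster. Replicating that topic to a Kafka cluster on Google Cloud is a separate step that this sketch does not cover.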